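Over the past few days I wanted to sharpen my programming skills, so I set out to write a Python script for a real-world scenario. In everyday penetration testing the first step is information gathering, and subdomain enumeration is an essential part of it.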

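There are plenty of subdomain enumeration tools online, including many open-source ones from outside China, but I wrote a detection script tailored to my own needs. It has two main features:

1. Subdomain collection

2. Subdomain liveness detection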

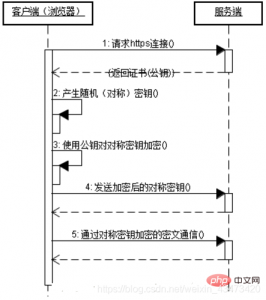

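Let's start with the first feature. For subdomain collection I don't call any foreign services such as VirusTotal (vt) or ThreatCrowd, even though they offer subdomain lookups of their own. I have applied for their API keys, but they haven't been approved yet, so that will come in a later version. Instead, the script uses three Chinese sources: Baidu, Chinaz (站长之家), and 站长帮手网 (i.links.cn). It extracts every subdomain from the result pages with regular expressions, which saves a lot of time compared with brute-forcing subdomains. In real-world crowdsourced testing (众测), speed is everything!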
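The regex-based collection described above comes down to pulling hostnames that end in the target domain out of each result page and merging the per-source results. Below is a minimal Python 3 sketch of that idea (the script in this post targets Python 2.7); `extract_subdomains` and `merge_sources` are hypothetical helper names, and the sample HTML is made up. Unlike a bare `(.*?)` pattern, it escapes the domain with `re.escape` and only allows hostname characters in the captured prefix, so a match cannot run across `/`, quotes, or other URLs on the page.

```python
import re


def extract_subdomains(html, key_domain):
    """Return the set of subdomains of key_domain found in http(s):// links in html."""
    # re.escape turns "example.com" into "example\.com" so the dot is literal;
    # the label group cannot cross "/" or quotes, so it stays inside one hostname.
    pattern = re.compile(
        r'https?://((?:[A-Za-z0-9-]+\.)+)' + re.escape(key_domain) + r'(?![\w-])',
        re.IGNORECASE,
    )
    # Each match is the label prefix including its trailing dot, e.g. "mail."
    return {(prefix + key_domain).lower() for prefix in pattern.findall(html)}


def merge_sources(*results):
    """Union the per-source results, drop duplicates and sort them."""
    merged = set()
    for result in results:
        merged.update(result)
    return sorted(merged)


if __name__ == '__main__':
    # Hypothetical page snippets standing in for search-engine / Chinaz responses
    page1 = '<a href="http://www.example.com/">x</a> <a href="https://mail.example.com">y</a>'
    page2 = 'http://www.example.com/a http://dev.api.example.com/ http://notexample.com/'
    print(merge_sources(extract_subdomains(page1, 'example.com'),
                        extract_subdomains(page2, 'example.com')))
    # prints ['dev.api.example.com', 'mail.example.com', 'www.example.com']
```

Note that `notexample.com` is correctly ignored: the pattern requires a dot right before the target domain.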

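The second feature is liveness detection. When we collect subdomains with tools, we usually end up with a pile of domains that have already been shut down and cannot be reached, and some of the rest may just be infrastructure such as nginx reverse proxies. In a pentest these are less impactful than pages that serve real business functions, so the script checks the HTTP status code of every subdomain and prints only those that return 200, together with the page title. The title often reveals what a site actually does, which also helps us quickly map out the services a company offers.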
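The "status 200 plus title" rule can be sketched as a pure function, which keeps it testable without network access. This is a Python 3 sketch; `live_title` and its `max_len` parameter are hypothetical names, and the 50-character cut mirrors the `[:50]` slice used when the script prints titles.

```python
import re

# DOTALL lets the title span several lines; IGNORECASE also accepts <TITLE>
TITLE_RE = re.compile(r'<title[^>]*>(.*?)</title>', re.IGNORECASE | re.DOTALL)


def live_title(status_code, html, max_len=50):
    """Return the cleaned page title if the host counts as alive, else None.

    Only HTTP 200 counts as alive; a 200 page without a <title>
    yields an empty string.
    """
    if status_code != 200:
        return None
    match = TITLE_RE.search(html)
    if match is None:
        return ''
    # Collapse newlines, tabs and repeated spaces into single spaces
    title = ' '.join(match.group(1).split())
    return title[:max_len]
```

With requests, the inputs would come from a response object, e.g. `r = requests.get('http://' + host, timeout=5)` followed by `live_title(r.status_code, r.text)`. Keep in mind that a 200-only rule also skips hosts that answer with a redirect (301/302) or a 403, which can still be worth a manual look.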

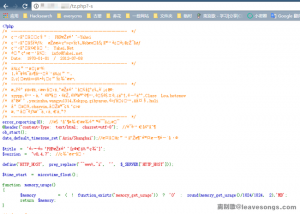

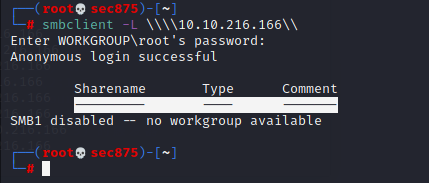

Here are a few test screenshots:

![Screenshot 1: Subdomain Detection v1.0](http://aqxbk.com/wp-content/uploads/2021/08/20210816155615-69.jpg)

![Screenshot 2: Subdomain Detection v1.0](http://aqxbk.com/wp-content/uploads/2021/08/20210816155615-57.jpg)

The source code (Python 2.7) is below:


```python
# -*- coding: utf-8 -*-
# Subdomain collection + liveness detection (Python 2.7, needs the requests package)
import random
import re
import threading

import requests

# Silence the InsecureRequestWarning caused by verify=False below
requests.packages.urllib3.disable_warnings()


def getHeaders():
    '''
    Random-agent: build request headers with a randomly chosen User-Agent
    '''
    user_agent = ['Mozilla/5.0 (Windows; U; Win98; en-US; rv:1.8.1) Gecko/20061010 Firefox/2.0',
                  'Mozilla/5.0 (Windows; U; Windows NT 5.0; en-US) AppleWebKit/532.0 (KHTML, like Gecko) Chrome/3.0.195.6 Safari/532.0',
                  'Mozilla/5.0 (Windows; U; Windows NT 5.1 ; x64; en-US; rv:1.9.1b2pre) Gecko/20081026 Firefox/3.1b2pre',
                  'Opera/10.60 (Windows NT 5.1; U; zh-cn) Presto/2.6.30 Version/10.60',
                  'Opera/8.01 (J2ME/MIDP; Opera Mini/2.0.4062; en; U; ssr)',
                  'Mozilla/5.0 (Windows; U; Windows NT 5.1; ; rv:1.9.0.14) Gecko/2009082707 Firefox/3.0.14',
                  'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.106 Safari/537.36',
                  'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.133 Safari/537.36',
                  'Mozilla/5.0 (Windows; U; Windows NT 6.0; fr; rv:1.9.2.4) Gecko/20100523 Firefox/3.6.4 ( .NET CLR 3.5.30729)',
                  'Mozilla/5.0 (Windows; U; Windows NT 6.0; fr-FR) AppleWebKit/528.16 (KHTML, like Gecko) Version/4.0 Safari/528.16',
                  'Mozilla/5.0 (Windows; U; Windows NT 6.0; fr-FR) AppleWebKit/533.18.1 (KHTML, like Gecko) Version/5.0.2 Safari/533.18.5']
    headers = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
        'User-Agent': random.choice(user_agent),
        'Upgrade-Insecure-Requests': '1',
        'Connection': 'keep-alive',
        'Cache-Control': 'max-age=0',
        'Accept-Encoding': 'gzip, deflate, sdch',
        'Accept-Language': 'zh-CN,zh;q=0.8',
        'Cookie': ''}
    return headers


def baidu_site(key_domain):
    '''
    Get domains from Baidu (site:key_domain search, up to 10 result pages)
    '''
    headers = getHeaders()
    baidu_domains, check = [], []
    baidu_url = 'https://www.baidu.com/s?ie=UTF-8&wd=site:{}'.format(key_domain)
    # Escape the dots so "." in the domain is not a regex wildcard
    escaped = re.escape(key_domain)
    try:
        r = requests.get(url=baidu_url, headers=headers, timeout=10, verify=False).content
        if 'class="nors"' not in r:  # Check first: "nors" marks the no-result page
            for page in xrange(0, 10):  # max page_number
                pn = page * 10  # result offset of this page
                newurl = 'https://www.baidu.com/s?ie=UTF-8&wd=site:{}&pn={}&oq=site:{}'.format(key_domain, pn, key_domain)
                keys = requests.get(url=newurl, headers=headers, timeout=10, verify=False).content
                flags = re.findall(r'style="text-decoration:none;">(.*?)%s.*?</a><div class="c-tools"' % escaped, keys)
                check_flag = re.findall(r'class="(.*?)"', keys)
                for flag in flags:
                    domain_handle = flag.replace('https://', '').replace('http://', '')
                    if domain_handle not in check and domain_handle != '':
                        check.append(domain_handle)
                        domain_flag = domain_handle + key_domain
                        print '[+] Get baidu site:domain > ' + domain_flag
                        baidu_domains.append(domain_flag)
                # Hardly any class attributes: treat the page as empty and stop paging
                if len(check_flag) < 2:
                    return baidu_domains
        else:
            print '[!] baidu site:domain no result'
            return baidu_domains
    except Exception as e:
        print e
    return baidu_domains


def chinaz_site(key_domain):
    '''
    Get domains from chinaz (first 3 result pages)
    '''
    headers = getHeaders()
    chinaz_site = []
    # Hostname characters only, so a match cannot run across "/" or other URLs
    pattern = r'http://([\w.-]+?)\.%s' % re.escape(key_domain)
    for page in xrange(1, 4):
        chinaz_url = 'http://tool.chinaz.com/subdomain/?domain={0}&page={1}'.format(key_domain, page)
        try:
            s = requests.get(url=chinaz_url, headers=headers, verify=False, timeout=20)
        except Exception as e:
            print e
            break
        for i in re.findall(pattern, s.content):
            if key_domain not in i:
                chinaz_site.append(i + '.' + key_domain)
                print '[+] Get Chinaz site:domain > ' + i + '.' + key_domain
    return chinaz_site


def ilinks_site(key_domain):
    '''
    Get domains from i.links.cn (form POST)
    '''
    headers = getHeaders()
    ilinks_site = []
    ilinks_url = 'http://i.links.cn/subdomain/'
    postdata = {
        'domain': key_domain,
        'b2': 1,
        'b3': 1,
        'b4': 1,
    }
    try:
        s = requests.post(url=ilinks_url, data=postdata, headers=headers, timeout=10)
    except Exception as e:
        print e
        return ilinks_site
    pattern = r'http://([\w.-]+?)\.%s' % re.escape(key_domain)
    for i in set(re.findall(pattern, s.content)):
        if key_domain not in i:
            ilinks_site.append(i + '.' + key_domain)
            print '[+] Get ilinks site:domain > ' + i + '.' + key_domain
    return ilinks_site


def Subdomain(url):
    '''
    Summary the domains: merge all sources and drop duplicates
    '''
    subdomain = []
    subdomain.extend(baidu_site(url))
    subdomain.extend(chinaz_site(url))
    subdomain.extend(ilinks_site(url))
    return list(set(subdomain))


def info(url):
    '''
    Get information from domains: print URL, status and title for HTTP 200
    '''
    headers = getHeaders()
    url = 'http://' + url
    s = requests.get(url, headers=headers, timeout=5)
    s.encoding = 'utf-8'  # force UTF-8 decoding of the body
    www_status = s.status_code
    www_content = s.text
    if www_status == 200:
        # Text between the <title> tags; DOTALL covers titles that span lines
        match = re.search(r'<title[^>]*>(.*?)</title>', www_content, re.I | re.S)
        www_title = match.group(1) if match else ''
        # Collapse newlines and runs of whitespace into single spaces
        www_title = ' '.join(www_title.split())
        print '%-40s %-10s %-20s' % (url, www_status, www_title[:50])


def live(url):
    try:
        info(url)
    except Exception:
        # Unreachable hosts (DNS failure, timeout, refused) are skipped silently
        pass


def main():
    '''
    examples: baidu.com
    Without http:// or https://
    '''
    print 'please input url, such as baidu.com'
    url = raw_input('>').strip()
    url_list = Subdomain(url)
    # Get the num of domains
    print '-' * 52
    print '[+] There are [ %s ] domains' % len(url_list)
    print '-' * 52
    try:
        # Start every thread first, then wait for all of them,
        # so the liveness checks run in parallel
        tasks = []
        for domain in url_list:
            task = threading.Thread(target=live, args=(domain,))
            task.start()
            tasks.append(task)
        for task in tasks:
            task.join()
    except KeyboardInterrupt:
        # ctrl+c: quit
        print ''
        print 'exiting...'


if __name__ == '__main__':
    main()
```
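`main()` creates one thread per subdomain. If all of those threads run at once, a large target can mean hundreds of simultaneous connections. A bounded worker pool is one alternative that still probes in parallel but caps the number of concurrent requests. The Python 3 sketch below uses the standard library's `concurrent.futures.ThreadPoolExecutor`; `probe_all`, `fake_probe` and the default of 20 workers are hypothetical choices, and `probe` stands in for whatever per-host check you run (for example the status and title check done in `info()`).

```python
from concurrent.futures import ThreadPoolExecutor


def probe_all(hosts, probe, max_workers=20):
    """Run probe(host) for every host on a bounded thread pool.

    probe is any callable returning a result (e.g. a title) or None.
    Exceptions count as "not alive", like live() in the script.
    Returns (host, result) pairs for live hosts, in input order.
    """
    hosts = list(hosts)

    def safe_probe(host):
        try:
            return probe(host)
        except Exception:
            return None

    # At most max_workers probes run at the same time
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(safe_probe, hosts))
    return [(host, result) for host, result in zip(hosts, results) if result is not None]


if __name__ == '__main__':
    def fake_probe(host):
        # Hypothetical stand-in for an HTTP check; a real probe would fetch http://host
        if host.startswith('dead.'):
            raise IOError('connection refused')
        return 'title of ' + host

    hosts = ['www.example.com', 'dead.example.com', 'mail.example.com']
    for host, title in probe_all(hosts, fake_probe, max_workers=2):
        print('%-40s %s' % (host, title))
```

`pool.map` returns results in input order, so the printed list stays stable even though the requests finish in arbitrary order, and printing happens in one thread instead of interleaving output from many.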


© Copyright notice

Copyright of this article belongs to the author. Do not reproduce without permission.
